Artificial Intelligence has quickly become one of the most useful allies in modern software development. From generating code snippets to helping automate debugging, AI assistants are changing how developers create and maintain digital products. These tools can speed up workflows and help teams catch errors earlier. In today’s tech-driven environment, coding with AI assistance is reshaping roles, workflows, and skill sets within the software industry.

The Rise of AI Tools in Modern Software Development

AI-powered coding tools have grown rapidly with the integration of machine learning and natural language processing. These technologies enable developers to write better code by understanding intent, suggesting improvements, and detecting patterns. Tools like GitHub Copilot, Cursor, and ChatGPT-based plugins have become common in modern coding environments.

The appeal of these tools lies in their ability to reduce repetitive tasks and provide intelligent recommendations. As developers type, the AI suggests context-aware code snippets that match both syntax and logic. This not only speeds up development but also helps developers learn new frameworks and programming languages faster.

Furthermore, AI tools make projects more accessible by lowering the barrier to entry for beginners. They act as interactive mentors, providing real-time insights and guidance on best practices. Over time, AI-driven development tools are likely to shift the balance between automation and human creativity in software engineering.

How AI Coding Assistants Boost Developer Productivity

One of the major advantages of AI coding assistants is their ability to accelerate the software development lifecycle. Developers can now generate prototype-level code or refactor existing logic in a fraction of the time. This improvement in productivity allows teams to focus more on design, testing, and user experience rather than mundane coding tasks.

AI assistants also help developers solve problems more efficiently. For instance, instead of spending hours researching an unfamiliar library or syntax issue, coders can get candidate solutions or code snippets within seconds and then check them against the documentation or their tests. This short feedback loop reduces cognitive load and can shorten delivery times.

Teams using AI-assisted tools often notice fewer development bottlenecks, especially during large-scale projects. By automating documentation, test generation, and code suggestions, developers can maintain momentum and more consistent output, which makes deadlines easier to meet with fewer revisions.

Streamlining Code Reviews and Debugging with AI

Code reviews and debugging are traditionally time-consuming tasks that require meticulous attention. AI assistance streamlines these processes by flagging likely bugs, logical flaws, and performance issues as the code is written. These systems draw on patterns learned from large corpora of existing code to identify potential problems early.

In code reviews, AI acts as a digital co-reviewer. It highlights vulnerabilities, security concerns, or redundant logic that might be missed in manual inspection. This helps teams resolve issues before they reach production, improving software reliability and user trust.

Debugging is another area where AI truly shines. Instead of manually tracing errors, developers can rely on AI to suggest likely causes and solutions. It can even predict where future issues might occur based on historical data, saving both time and resources in the long run.
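To make the idea of learning from historical data concrete, here is a minimal sketch. It is a plain counting heuristic rather than how any particular AI tool works: it ranks files by how often they appear in commits whose messages mention a fix. The function name `rank_hotspots`, the keyword list, and the `HISTORY` records are all hypothetical and exist only for illustration.

```python
from collections import Counter

def rank_hotspots(commits, keywords=("fix", "bug")):
    """Rank files by how often they appear in commits that look like bug fixes.

    `commits` is an iterable of (message, [file paths]) pairs. Matching on
    keywords is a crude assumption; a real tool would use richer signals.
    """
    counts = Counter()
    for message, files in commits:
        lowered = message.lower()
        if any(keyword in lowered for keyword in keywords):
            counts.update(files)
    return counts.most_common()

# Hypothetical commit history, invented for this example.
HISTORY = [
    ("Fix null check in parser", ["parser.py"]),
    ("Add export feature", ["export.py", "cli.py"]),
    ("Bug: wrong offset in parser", ["parser.py", "lexer.py"]),
    ("Fix CLI flag handling", ["cli.py"]),
]

if __name__ == "__main__":
    for path, fixes in rank_hotspots(HISTORY):
        print(f"{path}: {fixes} fix commit(s)")
```

Files that keep showing up in fix commits are reasonable candidates for closer review, which is the kind of signal a predictive assistant could build on.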

Improving Code Quality and Collaboration Using AI

Ensuring consistent code quality across teams is a significant challenge in software development. AI helps by enforcing coding standards, detecting anti-patterns, and recommending optimizations based on best practices. As a result, projects become more maintainable and easier to scale.
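As an illustration of what detecting an anti-pattern can look like, the sketch below uses Python's standard `ast` module to flag functions with mutable default arguments. This is a conventional rule-based check, shown only to make the idea tangible; it is not a description of how any AI assistant works internally. The helper name `find_mutable_defaults` and the `SAMPLE` source are assumptions made for this example.

```python
import ast

# Literal node types that create a new mutable object in a default value.
MUTABLE_NODES = (ast.List, ast.Dict, ast.Set)

def find_mutable_defaults(source: str) -> list[tuple[str, int]]:
    """Return (function name, line number) for functions with mutable defaults."""
    findings = []
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef)):
            # kw_defaults holds None for keyword-only args without a default.
            defaults = node.args.defaults + [
                d for d in node.args.kw_defaults if d is not None
            ]
            if any(isinstance(d, MUTABLE_NODES) for d in defaults):
                findings.append((node.name, node.lineno))
    return sorted(findings, key=lambda finding: finding[1])

# Hypothetical code under review, invented for this example.
SAMPLE = '''
def append_item(item, bucket=[]):
    bucket.append(item)
    return bucket

def safe_append(item, bucket=None):
    bucket = [] if bucket is None else bucket
    bucket.append(item)
    return bucket
'''

if __name__ == "__main__":
    for name, line in find_mutable_defaults(SAMPLE):
        print(f"{name} (line {line}): mutable default argument")
```

Only `append_item` is flagged here, because its default list is shared across calls, while `safe_append` creates a fresh list each time.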

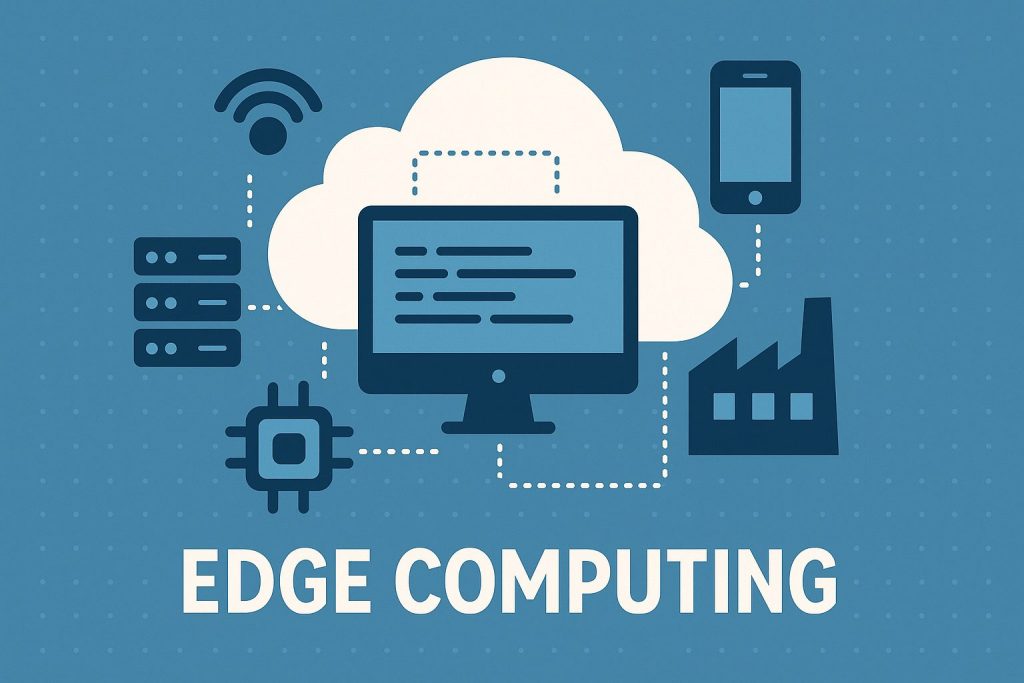

AI-based collaboration tools allow developers to work more efficiently, even in remote or distributed teams. By integrating smart assistants into version control systems, teams get real-time feedback on their commits, merge requests, and branch management. This automation reduces miscommunication and increases overall team alignment.

The synergy between human developers and AI fosters a more creative and cohesive workflow. Developers can focus on architectural decisions, user experience, and problem-solving while letting AI handle repetitive and error-prone tasks. This balanced dynamic enhances productivity without diminishing the human element of innovation.

The Future of Coding Jobs in the Age of AI Assistance

The rise of AI in coding doesn’t mark the end of human developers; it signals a shift in how coding is done. Developers equipped with AI tools gain more power, flexibility, and efficiency, allowing them to take on more complex goals than before. Rather than replacing programmers, AI is creating new opportunities for specialized skills in training, tuning, and managing intelligent systems.

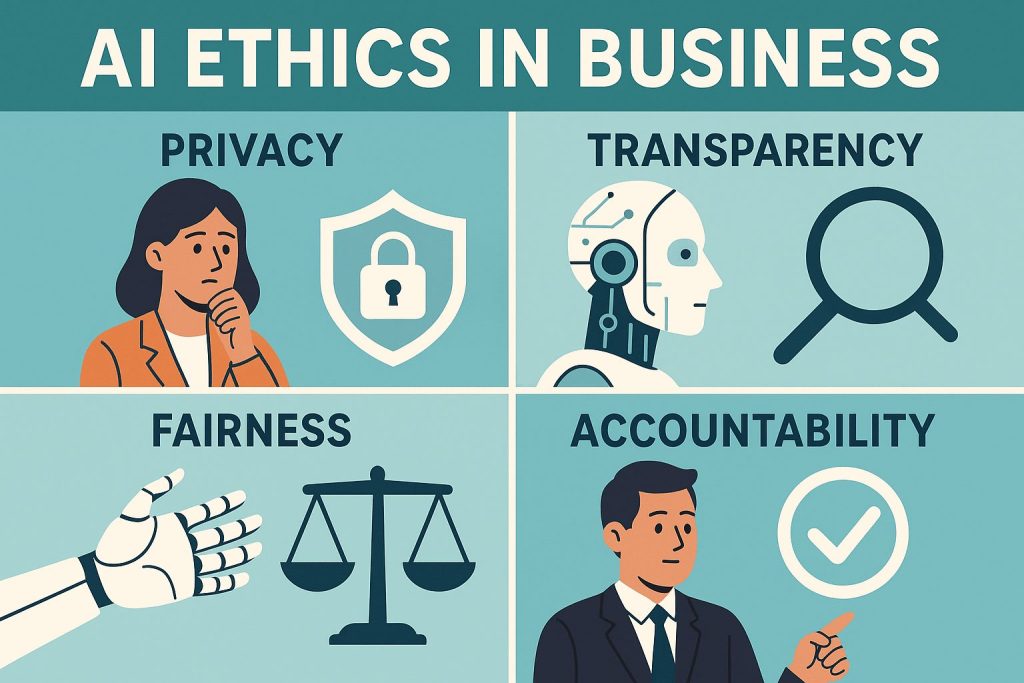

Future software engineers will likely spend less time writing raw code and more time orchestrating automated systems. Understanding how to effectively collaborate with AI assistants will become a critical skill. The ability to interpret AI output, validate logic, and apply ethical coding principles will distinguish top-tier developers from others.
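One simple way to validate AI output is to run a suggested function against cases whose answers are already known before accepting it. The sketch below assumes a hypothetical assistant-suggested helper, `slugify_suggested`, and a small hand-written case list; both are invented for illustration and do not come from any real tool.

```python
from typing import Callable, Iterable

def validate_candidate(
    candidate: Callable[[str], str],
    cases: Iterable[tuple[str, str]],
) -> list[str]:
    """Run a candidate function on known cases and return failure messages."""
    failures = []
    for given, expected in cases:
        try:
            actual = candidate(given)
        except Exception as exc:  # a crash counts as a failed case
            failures.append(f"{given!r}: raised {type(exc).__name__}")
            continue
        if actual != expected:
            failures.append(f"{given!r}: expected {expected!r}, got {actual!r}")
    return failures

# Hypothetical suggestion from an assistant: plausible, but it ignores
# leading and trailing whitespace.
def slugify_suggested(title: str) -> str:
    return title.lower().replace(" ", "-")

# Hand-written expectations, including an edge case.
CASES = [
    ("Hello World", "hello-world"),
    ("  Padded Title ", "padded-title"),
]

if __name__ == "__main__":
    for failure in validate_candidate(slugify_suggested, CASES):
        print("FAILED", failure)
```

The suggestion passes the ordinary case and fails the padded one, which is the kind of gap a developer reviewing AI output needs to catch.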

Ultimately, the evolution of coding with AI assistance promises a more innovative and productive technological landscape. AI won’t replace creativity; it will enhance it, helping the next generation of developers work faster and more efficiently.

Q and A

Q: Will AI replace human programmers completely?

A: No. AI assists in repetitive and predictive tasks, but creativity, problem-solving, and critical thinking remain uniquely human strengths.

Q: How can new developers benefit from AI coding tools?

A: New developers can learn faster, reduce syntax errors, and gain exposure to best practices by using AI tools that act as interactive mentors.

Q: What skills will be important in the AI-driven future of development?

A: Developers should focus on AI integration, data literacy, ethical coding, and continuous learning to stay relevant in the evolving tech landscape.

Artificial Intelligence is no longer a futuristic concept—it is a present force redefining how software is built and maintained. As AI coding assistants continue to evolve, developers will harness these tools to build smarter, more efficient applications. Embracing this partnership between human expertise and machine intelligence will ultimately shape a new era of innovation and opportunity in the world of programming.